association rules

What are association rules in data mining?

Association rules are if-then statements that show the probability of relationships between data items within large data sets in various types of databases. At a basic level, association rule mining involves the use of machine learning models to analyze data for patterns, called co-occurrences, in a database. It identifies frequent if-then associations, which themselves are the association rules.

For example, if 75% of people who buy cereal also buy milk, then there is a discernible pattern in transactional data that customers who buy cereal often buy milk. An association rule is that there is an association between buying cereal and milk.

Different algorithms use association rules to discover such patterns within data sets. These algorithms are capable of analyzing big data sets to discover patterns. Artificial intelligence (AI) and machine learning are being used to enable algorithms and their related association rules to keep up with the large volumes of data being generated today.

Why are association rules important?

Various vertical markets use these algorithms in different ways. The fundamental patterns and associations between data points discovered using association rules shape how businesses operate. For example, association rule mining is used to help discover correlations between suspicious and normal transactions in transactional data or disease and healthy patterns in medical data sets.

These rules and the algorithms they apply expedite and simplify large-scale analyses that are impossible for people to accomplish without sacrificing productivity. They affect the work of nontechnical professionals, as well as technical ones. A marketing team could use association rules on customer purchase history data to better understand which customers are most likely to repurchase. A cybersecurity professional might use association rules on an algorithm used to detect fraud and cyberattacks on IT infrastructures.

How do association rules work?

An association rule has two parts: an antecedent (if) and a consequent (then). An antecedent is an item found within the data. A consequent is an item found in combination with the antecedent. The if-then statements form itemsets, which are the basis for calculating association rules made up of two or more items in a data set.

Data pros search data for frequently occurring if-then statements. They then look for support for the statement in terms of how often it appears and confidence in it from the number of times it's found to be true.

Association rules are typically created from itemsets that include many items and are well represented in data sets. However, if rules are built from analyzing all possible itemsets or too many sets of items, too many rules result, and they have little meaning.

Once established, data scientists and others in fields requiring data analyses apply association rules to uncover important patterns.

What is support and confidence in data mining?

Association rules are created by searching data for frequent if-then patterns and using the criteria support and confidence to identify the most important relationships. Support indicates how frequently an item appears in the data. Confidence indicates the number of times the if-then statement is found to be true. A third metric, called lift, can be used to compare observed confidence with expected confidence, or how many times an if-then statement is expected to be found true.

Two steps are involved in generating association rules. Support and confidence play a crucial role in these steps:

- Identify items that commonly appear in a given data set. Given how frequently certain items appear, set minimum support thresholds to indicate how many times items must appear to undergo step two.

- Look at each itemset that includes the items meeting certain minimum support thresholds. Calculate confidence thresholds that indicate the frequency an association between two items actually occurs. For instance, if two items are matched more than half the time they appear in a data set, that could constitute a simple confidence threshold.

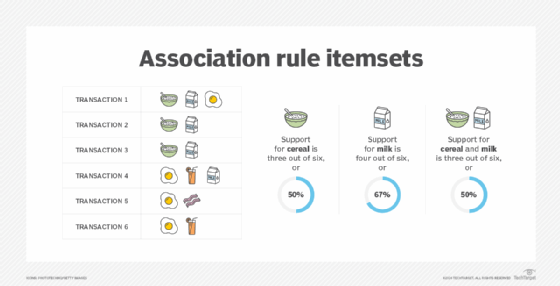

Figure 1 shows how support is calculated for an itemset in a list of transactions. Based on the table, calculating confidence that cereal will be purchased alongside milk starts with taking the support for buying milk and cereal together, or three transactions out of six. That number is then divided by the support for milk, or four transactions out of six. This equals 75% confidence that cereal will be purchased with milk.

Types of association rules in data mining

There are multiple types of association rules in data mining. They include the following:

- Generalized. Rules in this category are meant to be general examples of association rules that provide a high-level overview of what these associations of data points look like.

- Multilevel. Multilevel association rules separate data points into different levels of importance -- known as levels of abstraction -- and distinguish between associations of more important data points and ones of lower importance.

- Quantitative. This type of association rule is used to describe examples where associations are made between numerical data points.

- Multirelational. This type is more in-depth than traditional association rules that consider relationships between single data points. Multirelational rules are made across multiple or multidimensional databases.

What are use cases for association rules?

In data science, association rules are used to find correlations and co-occurrences between data sets. They're used to explain patterns in data from seemingly independent information repositories, such as relational databases and transactional databases. The act of using association rules is sometimes referred to as association rule mining or mining associations.

There are several common areas where association rules are used. They include the following:

- Customer analytics. Association rules are used in customer analytics to analyze and predict customer behavior in areas such as data on buying trends and purchase histories.

- Market basket analysis. Association rules are used in retail to discern which products are frequently bought together.

- Product clustering and store layout. Association rules are used to examine specific data on products, letting retailers group them together based on common attributes.

- Catalog design. When association rules are used to examine customer purchase history, they can inform where products are placed and how they're presented in retailers' catalogs.

- Software development. Machine learning and AI are used to build programs with the ability to become more efficient without being explicitly programmed. These programs often use association rules to carry out large-scale data mining tasks.

- Text mining. In text mining, association rules are used to analyze relationships between words and sentences in large documents to produce new information.

The following examples are real-world use cases for association rules:

- Healthcare. Doctors can use association rules to help diagnose patients. Many diseases have similar symptoms, so there are many variables to consider when making a diagnosis. With the rise of AI in healthcare, doctors can use association rules and machine learning to do data analysis to compare symptom relationships from past cases to determine the probability of a given illness based on a person's current symptoms. As new diagnoses get made, machine learning models can adapt the rules to reflect the updated data.

- Retail. Retailers can collect data about purchasing patterns, recording purchase data as item barcodes are scanned by point-of-sale systems. Machine learning models can look for co-occurrence in the data to determine which products are most likely to be purchased together. The retailer can then adjust its marketing and sales strategy to take advantage of this information. For example, items that are often bought together can be placed near each other, while items that sell less often than others can be considered lower priority when stocking.

- User experience design. Developers can collect data on how consumers use a website. They can then use associations in the data to optimize the website's user interface. For example, they might look at where users tend to click and what maximizes the chance that they engage with a call to action.

- Entertainment. Services such as Netflix and Spotify use association rules in their content recommendation engines. Machine learning models analyze past user behavior data for frequent patterns, develop association rules and use those rules to recommend content that a user is likely to engage with. They can also help organize content in a way that highlights the most interesting content for a given user.

- Finance. In the financial sector, association rules are used to differentiate between legitimate and fraudulent transactions in ways that are more efficient than human employees. For example, an insurance company can use association rules to analyze large data volumes and group anomalies together by attributes to detect a wider pattern of fraud and determine what its source is.

- IT. Cybersecurity professionals use association rules in machine learning for fraud detection and other forms of risk management to prevent cyberattacks.

How to measure of the effectiveness of association rules

The strength of an association rule is measured by support and confidence. Support measures how often the relationship a given rule refers to appears in the database being mined, while confidence refers to the number of times the relationship turns out to be true. A rule may show a strong correlation in a data set because it appears often but may occur far less when applied. This is a case of high support but low confidence.

Conversely, a rule might not stand out in a data set, but continued analysis shows that it occurs frequently. This is a case of high confidence and low support. Using these measures helps data engineers and analysts separate causation from correlation and enables them to properly value a given rule.

A third value parameter, known as the lift value, is the ratio of observed confidence to expected confidence. If the lift value is less than one, then there is a negative correlation between data points. If the value is greater than one, there's a positive correlation. If the ratio equals one, then there is no correlation.

Association rule algorithms

Examples of popular data mining algorithms that use association rules include the following:

- AIS. This algorithm generates and counts itemsets as it scans data. In transaction data, AIS determines which large itemsets contain a transaction. New candidate itemsets are created by extending the large itemsets with other items in the transaction data.

- Apriori. The Apriori algorithm takes an iterative approach, where it scans a database to apply association rules, focusing on identifying large itemsets with subsets of similar features as potential candidates for these rules. Itemsets that aren't large are discarded, and the remaining itemsets are the candidates the algorithm considers. The Apriori algorithm considers any subset of a frequent itemset to also be a frequent itemset. With this approach, the algorithm reduces the number of candidates being considered by only exploring itemsets with support counts greater than the minimum support count.

- SETM. This algorithm also generates candidate itemsets as it scans a database, but it accounts for the itemsets at the end of its scan. New candidate itemsets are generated the same way as with the AIS algorithm, but the transaction ID of the generating transaction is saved with the candidate itemset in a sequential data structure. At the end of the scan, the support count of candidate itemsets is created by aggregating the sequential structure.

- ECLAT. This name stands for Equivalence Class Clustering and Bottom-up Lattice Traversal. The ECLAT algorithm is a version of the Apriori algorithm that explores complex classes of itemsets first and then repeatedly boils them down and simplifies them.

- FP-growth. FP stands for frequent pattern. The FP-growth algorithm uses a tree structure, called an FP-tree, to map out relationships between individual items to find the most frequently recurring patterns.

There are certain caveats to note when using such algorithms, however. For example, the downside of both the Apriori and SETM algorithms is that each one can generate and count many small candidate itemsets, according to Dr. Saed Sayad, author of Real Time Data Mining.

Examples of association rules in data mining

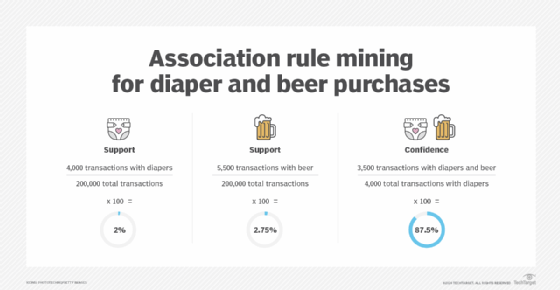

A classic example of association rule mining refers to a relationship between diapers and beer. The example, which seems fictional, claims that men who go to a store to buy diapers are also likely to buy beer. The association rule for this example is expressed as a percentage, indicating the likelihood a customer buys beer and diapers together. Data that points to that might look like this:

- A supermarket has 200,000 customer transactions in a week.

- Of the total number of transactions, 4,000, or 2%, include the purchase of diapers.

- 5,500 transactions, or 2.75%, include the purchase of beer.

- 3,500 transactions, or 1.75%, include both the purchase of beer and diapers.

- Since beer is included in 87.5% of diaper purchases, that indicates a link between diapers and beer.

History of association rules

Association rule mining was defined in the 1990s when computer scientists Rakesh Agrawal, Tomasz Imieliński and Arun Swami used an algorithm to find relationships between items based on data from point-of-sale systems. Applying the algorithms to supermarkets, the three researchers discovered links between different items purchased and coined the term association rules, referring to information that helps predict the likelihood of different products being purchased together. The concepts behind association rules can be traced back earlier than this work.

For retailers, association rule mining offered a way to better understand customer purchase behaviors. Because of its retail origins, association rule mining is often referred to as market basket analysis.

With advances in data science, AI and machine learning, along with the generation of a lot more data, association rules have been extended to more use cases. AI and machine learning provide larger and more complex data sets to be analyzed and mined for association rules.

Machine learning models involve many techniques, processes and practices in order to run successfully. Learn how machine learning models are created and used.