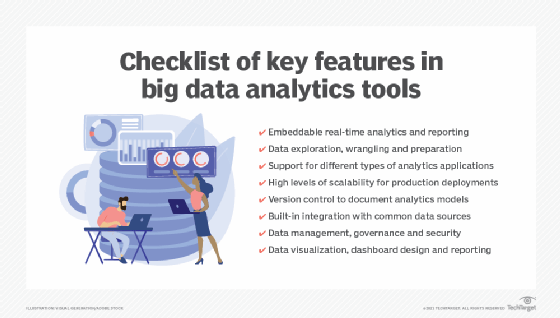

12 must-have features for big data analytics tools

Searching for a big data analytics tool for your organization? Here are 12 key features to look for during the software evaluation and selection process.

Big data analytics is a complex process that can involve data scientists, data engineers, business users, developers and data management teams. Crafting data analytics models is just one part of the process, and big data analytics tools must include a variety of features to fully meet user needs.

For example, adopting the right tools can reduce the burden of pulling together data sets from cloud object storage services or Hadoop, NoSQL databases and other big data platforms for analysis. The right big data technology can also improve the user experience in ways that lead to more effective analytics projects and, ultimately, better business decisions.

The following are 12 must-have big data analytics features that can help reduce the effort required by data scientists and other users to produce the desired results:

1. Embeddable results for real-time analytics and reporting

Big data analytics initiatives gain more value for organizations when the insights gleaned from analytics models can help support business decisions being made on the fly, often while business executives and managers are using other applications.

"It is of utmost importance to be able to incorporate these insights into a real-time decision-making process," said Dheeraj Remella, chief product officer at in-memory database provider VoltDB.

Tools should therefore be able to produce insights in a format that is easily embeddable in decision-making platforms, which in turn should be able to apply them to a real-time stream of data to help drive in-the-moment decisions.

2. Data wrangling and preparation

Data scientists tend to spend a good deal of their time cleaning, labeling and organizing data to prepare it for analytics uses. The data wrangling and preparation process involves seamless integration across disparate data sources, plus steps that include data collection, profiling, cleansing, transformation and validation.

Big data analytics tools must support the full spectrum of data types, protocols and integration scenarios to speed up and simplify these data wrangling steps, said Joe Lichtenberg, director of product and industry marketing for data platforms at InterSystems, a database and healthcare software vendor.
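As a rough illustration of the profiling, cleansing, transformation and validation steps named above, here is a minimal Python sketch. The records, field names and rules are all hypothetical and chosen only to show the shape of each step; real tools apply the same ideas at far larger scale and across many more data types.

```python
from collections import Counter

# Hypothetical raw records, as if collected from two different sources.
RAW_RECORDS = [
    {"customer_id": "101", "country": " us ", "revenue": "1200.50"},
    {"customer_id": "102", "country": "DE", "revenue": ""},
    {"customer_id": "101", "country": "US", "revenue": "1200.50"},  # duplicate ID
    {"customer_id": "103", "country": "fr", "revenue": "n/a"},
]


def profile(records):
    """Profiling: count empty values per field."""
    missing = Counter()
    for record in records:
        for field, value in record.items():
            if value.strip() == "":
                missing[field] += 1
    return dict(missing)


def cleanse(records):
    """Cleansing: trim whitespace, normalize case, drop repeated customer IDs."""
    seen, cleaned = set(), []
    for record in records:
        customer_id = record["customer_id"].strip()
        if customer_id in seen:
            continue
        seen.add(customer_id)
        cleaned.append({
            "customer_id": customer_id,
            "country": record["country"].strip().upper(),
            "revenue": record["revenue"].strip(),
        })
    return cleaned


def transform(records):
    """Transformation: convert revenue to float, or None if it cannot be parsed."""
    transformed = []
    for record in records:
        try:
            revenue = float(record["revenue"])
        except ValueError:
            revenue = None
        transformed.append({**record, "revenue": revenue})
    return transformed


def validate(records):
    """Validation: split records into usable and rejected lists."""
    valid = [r for r in records if r["revenue"] is not None]
    rejected = [r for r in records if r["revenue"] is None]
    return valid, rejected
```

Chaining the steps as `validate(transform(cleanse(RAW_RECORDS)))` leaves one usable record and two rejected ones in this sample, which is the kind of result a data scientist would want surfaced before modeling begins.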

3. Data exploration

Big data analytics frequently involves an ad hoc data discovery and exploration phase. Sometimes considered to be part of data preparation, exploring the underlying data helps data science teams understand the business context of a problem and formulate better analytics questions. Features that streamline this process reduce the effort involved in testing new hypotheses about the data, so that bad ones are weeded out faster and useful connections buried in the data are easier to find.

In addition, exploration features should make it easier to collaborate on a data set with colleagues. "Tools must support exploration and collaboration, enabling people of all skill levels to look rapidly at data from multiple perspectives," said Andy Cotgreave, technical evangelist at BI and analytics vendor Tableau.

Strong data visualization capabilities can also help in the data exploration process; sometimes, it's difficult even for data scientists to surface useful insights about a data set without first visualizing the data.

4. Support for different types of analytics

There is a wide variety of approaches to putting big data analytics techniques into production use, from basic BI applications to predictive analytics, real-time analytics, machine learning and other forms of advanced analytics. Each approach provides different kinds of business value. Good big data analytics tools should be functional and flexible enough to support these different use cases with minimal effort and without the retraining that is often required when adopting separate tools.

5. Scalability

Data scientists typically have the luxury of developing and testing different analytics models on small data sets over long periods. But the predictive and machine learning models that result from those efforts need to run economically in production and often must deliver results quickly. This requires big data analytics systems to scale well, both for ingesting data and for working with large data sets in production, without exorbitant hardware or cloud services costs.

"A tool that scales an algorithm from small data sets to large with minimal effort is also critical," said Eduardo Franco, business leader for market forecasting at geospatial analytics vendor Descartes Labs. "So much time and effort is spent in making this transition, so automating this is a huge help."

6. Version control

In a big data analytics project, several data scientists and other users may be involved in adjusting the parameters of analytics models. Some of the changes that are made may initially look promising, but they can create unexpected problems when tested further or pushed into production.

Version control features built into big data analytics tools can improve the ability to track these changes. If problems do emerge later, they can also make it easier to roll back an analytics model to a previous version that worked better.

"Without version control, one change made by a single developer can result in a breakdown of all that was already created," said Charles Amick, vice president of presales engineering and former head of data at Devo Technology, a security logging and analytics platform provider.
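To make the tracking and rollback idea concrete, here is a minimal Python sketch of a parameter history for an analytics model. The class name, its methods and the sample parameters are hypothetical; it does not represent any vendor's feature, only the general pattern of recording who changed what and restoring an earlier state without erasing the record.

```python
import copy


class ModelVersionHistory:
    """Append-only history of model parameter sets (hypothetical helper)."""

    def __init__(self):
        self._versions = []  # list of (author, note, params) tuples

    def commit(self, params, author, note):
        """Store a snapshot of params and return its 1-based version number."""
        self._versions.append((author, note, copy.deepcopy(params)))
        return len(self._versions)

    def get(self, version):
        """Return a copy of the parameters stored under a version number."""
        _, _, params = self._versions[version - 1]
        return copy.deepcopy(params)

    def rollback(self, version, author):
        """Re-commit an earlier version as the newest one, keeping the full history."""
        return self.commit(self.get(version), author, f"rollback to v{version}")

    def latest(self):
        """Return a copy of the most recently committed parameters."""
        return self.get(len(self._versions))

    def log(self):
        """Return (version, author, note) for every commit, oldest first."""
        return [
            (number, author, note)
            for number, (author, note, _) in enumerate(self._versions, start=1)
        ]
```

In this sketch, a rollback is itself recorded as a new version, so the log still shows the change that caused the problem and who reverted it.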

7. Simple data integration

The less time data scientists and developers spend customizing integrations to connect analytics systems to data sources and business applications, the more time they can spend improving, deploying and running analytics models.

Simple data integration and access capabilities also make it easier to share analytics results with other users. Big data analytics tools should provide built-in connectors and development toolkits for easy integration with existing databases, data warehouses, data lakes and applications -- both on premises and in the cloud.

8. Data management

Big data analytics tools need a robust yet efficient data management platform as a foundation to ensure continuity and standardization across all deliverables, said Tim Lafferty, director of data science at analytics consultancy Abisam Solutions. As the magnitude of data increases in big data environments, often so does its variability. Data sets may include large numbers of inconsistencies and different formats that need to be harmonized.

Strong data management features can help an enterprise maintain a single source of truth, which is critical for successful big data initiatives. They can also improve visibility into data sets for users and provide guidance to them. For example, push notification features can proactively alert users about stale data, ongoing maintenance or changes to data definitions.
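A stale-data alert of the kind mentioned above comes down to comparing each data set's last update time against an allowed age. The Python sketch below shows only that check; the function name, data set names and threshold are assumptions for illustration, and delivering the notification itself is left out.

```python
from datetime import datetime, timedelta, timezone


def find_stale_datasets(last_updated, max_age, now=None):
    """Return sorted names of data sets whose last update is older than max_age.

    last_updated maps a data set name to a timezone-aware datetime.
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        name for name, updated_at in last_updated.items()
        if now - updated_at > max_age
    )
```

A scheduler could run this check periodically and pass the returned names to whatever alerting channel the organization already uses.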

9. Data governance

Data governance features are also important in big data analytics tools to help enterprises implement internal data standards and comply with data privacy and security laws. This includes being able to track the sources and characteristics of the data sets used to build analytics models. Doing so helps ensure that data is used properly by data scientists, data engineers and others, and helps identify hidden biases in the data sets that could skew analytics results.

Effective data governance is especially crucial for sensitive data, such as protected health information and personally identifiable information that is subject to privacy regulations. For example, some tools now include the ability to anonymize data, allowing data scientists to build models based on personal information in compliance with regulations like GDPR and CCPA.
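One common building block for this kind of feature is replacing direct identifiers with keyed hashes before data reaches a modeling environment. The Python sketch below illustrates that technique, which is more accurately called pseudonymization than full anonymization; whether it is sufficient under a given regulation depends on the wider context. The key, field list and record are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

# Assumed set of fields that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(record, key=SECRET_KEY):
    """Replace direct identifiers with truncated keyed hashes; keep other fields."""
    masked = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()[:16]
        else:
            masked[field] = value
    return masked
```

Because the same input and key always produce the same token, records for one person can still be joined across data sets without exposing the underlying name or email address.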

10. Support for data processing frameworks

Many big data platforms focus on either analytics or data processing. Some frameworks -- like Apache Spark -- support both, which enables data scientists and others to use the same platform for real-time stream processing; complex extract, transform and load tasks; machine learning; and programming in SQL, Python, R and other languages.

Big data analytics tools need to have ties to various processing engines that can help organizations build data pipelines to support the development, training and implementation of analytics models. This is important because data science is a highly iterative process. A data scientist might create 100 models before arriving at one that is put into production, an undertaking that often involves enriching the data to improve the results of the models.

11. Data security

Excessive data security can discourage engagement with analytics data. But big data analytics tools that include well-designed security features can address IT concerns about data breaches while also encouraging appropriate data usage. Getting that balance right is critical in building a data culture and truly becoming a data-driven organization.

Achieving this could involve providing role-based access to sets of big data and other granular security controls. Also, features that help flag personal information can make it easier to process and share data in ways that are compliant with GDPR, CCPA and other privacy regulations.
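The two ideas in the preceding paragraph, role-based access and flagging of personal information, can be sketched in a few lines of Python. The roles, data set names and email pattern here are hypothetical, and the pattern is deliberately simple; production tools typically detect many more kinds of personal data.

```python
import re

# Hypothetical mapping of roles to the data sets each role may read.
ROLE_PERMISSIONS = {
    "data_scientist": {"sales_aggregates", "web_clickstream"},
    "business_analyst": {"sales_aggregates"},
}

# Simplified pattern for spotting values that look like email addresses.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def can_read(role, dataset):
    """Role-based access: unknown roles get no access by default."""
    return dataset in ROLE_PERMISSIONS.get(role, set())


def flag_personal_columns(rows):
    """Return sorted names of columns where any value looks like an email address."""
    flagged = set()
    for row in rows:
        for column, value in row.items():
            if isinstance(value, str) and EMAIL_PATTERN.search(value):
                flagged.add(column)
    return sorted(flagged)
```

Denying access by default for unrecognized roles keeps the check on the cautious side, while the column flags give users a prompt to review data before sharing it rather than blocking them outright.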

12. Data visualization, dashboard design and reporting

Ultimately, data scientists and analysts need to communicate the results of big data analytics applications to business executives and workers. To do so, they need integrated tools for creating data visualizations, dashboards and reports, along with capabilities for managing the data visualization and dashboard design process.

For example, numerous visualization techniques can be applied to a data set, but the information must be presented in ways that are understandable to business users. Also, too many visualizations can clog a dashboard and "be overwhelming to users who are quickly looking for information," said Ashley Howard Neville, a senior marketing evangelist at Tableau.

Features are available that enable visualization and dashboard designers to provide more information and add context as required. Examples include the ability to add tooltip overlays with additional data or visualizations, and options to show or hide navigation buttons, filters and other design elements.