
Event streaming technologies a remedy for big data's onslaught

Event streaming is emerging as a viable way to analyze, in real time, the torrents of information pouring into collection systems from multiple data sources.

As businesses depend more and more on AI and analytics to make critical decisions faster, big data streaming -- including event streaming technologies -- is emerging as the best way to analyze information in real time. Deploying and managing these streaming platforms can involve many hurdles, but the tools for developing and deploying streaming applications are improving.

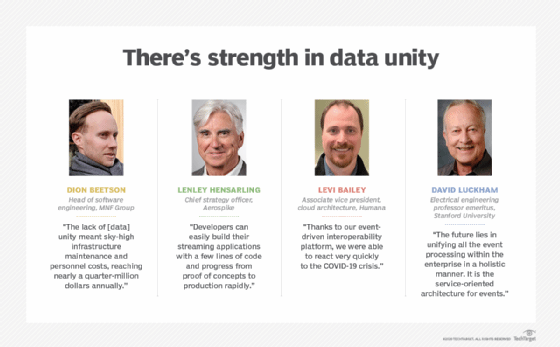

"Streaming platforms have evolved from being just a mere message bus connecting disparate systems to powerful stream processing engines that support complex real-time AI and machine learning use cases, such as personalized offers, real-time alerts, fraud detection and predictive maintenance," said Lenley Hensarling, chief strategy officer at NoSQL platform provider Aerospike.

Tools such as Kafka, Flink and Spark Streaming, along with services like Amazon Kinesis Data Streams, are leading the charge in providing APIs for complex event processing in real time. "Developers can easily build their streaming applications with a few lines of code," Hensarling explained, "and progress from proof of concepts to production rapidly."
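The "few lines of code" Hensarling mentions usually means chaining operators such as filter, group and count. As a framework-neutral sketch (plain Python generators, not the Kafka Streams, Flink or Spark API; the event shape and function names are hypothetical), a filter followed by a per-key running count might look like this:

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

# Hypothetical event shape for illustration: (customer_id, amount).
Event = Tuple[str, float]

def large_payments(events: Iterable[Event], min_amount: float) -> Iterator[Event]:
    """Filter step: pass through only events at or above a threshold."""
    for customer, amount in events:
        if amount >= min_amount:
            yield customer, amount

def running_counts(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Stateful step: emit the updated count for a key every time it appears."""
    counts: Counter = Counter()
    for customer, _ in events:
        counts[customer] += 1
        yield customer, counts[customer]

if __name__ == "__main__":
    stream = [("alice", 120.0), ("bob", 15.0), ("alice", 300.0), ("carol", 99.0)]
    # Operators compose lazily, so each event flows through the pipeline as it arrives.
    for update in running_counts(large_payments(stream, min_amount=100.0)):
        print(update)  # ('alice', 1) then ('alice', 2)
```

Real stream processors add what this sketch leaves out, such as partitioning, fault-tolerant state and delivery guarantees.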

Hensarling is even seeing big data streaming infrastructures help bridge the gap with mainframe applications. "Enterprises are always looking for a way to seamlessly bridge mainframe-based systems to modern services that are based on streaming technologies such as Spark, Kafka and Flink," he added.

Streaming analytics can also help reduce data transmission and storage costs by distributing processing across edge computing infrastructures, said Steve Sparano, principal product manager for event stream processing at SAS Institute. Distributed streaming, for example, can ease the implementation of intelligent filtering, pattern matching and model updates at the edge to reduce the lag time between a significant event, such as a machine fault or detected fraud, and the required response. Streaming edge analytics helps technology deployments become more autonomous, so they can make analytically driven decisions without transmitting data to a data center or the cloud where the analytics have traditionally been performed.
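Intelligent filtering at the edge can be as simple as a deadband rule on a sensor feed: transmit a reading only when it changes meaningfully or crosses an alarm limit. The sketch below is an illustrative assumption, not SAS's implementation, and the thresholds are hypothetical placeholders:

```python
from typing import Iterable, List, Optional

def edge_filter(readings: Iterable[float], deadband: float, alarm: float) -> List[float]:
    """Return only the readings worth sending upstream.

    A reading is forwarded if it reaches the alarm limit or moves more than
    `deadband` away from the last forwarded value; everything else stays local.
    """
    forwarded: List[float] = []
    last_sent: Optional[float] = None
    for value in readings:
        significant_change = last_sent is None or abs(value - last_sent) > deadband
        if value >= alarm or significant_change:
            forwarded.append(value)
            last_sent = value
    return forwarded

if __name__ == "__main__":
    temps = [70.0, 70.2, 70.1, 70.4, 75.0, 75.1, 90.5, 70.3]
    sent = edge_filter(temps, deadband=1.0, alarm=90.0)
    print(sent, f"{len(sent)}/{len(temps)} readings transmitted")
    # [70.0, 75.0, 90.5, 70.3] 4/8 readings transmitted
```

Halving the transmitted readings in this toy run illustrates the transmission savings Sparano describes; the alarm check also lets the edge device trigger a local response without a round trip to the cloud.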

Consolidating streams of data

As streaming becomes more commonplace, enterprises are starting to grapple with ways to integrate multiple streaming platforms. MNF Group, a telecommunications software and service provider in Australia, recently undertook a major digital transformation effort to unify different streaming applications that had evolved across business units and acquisitions. The company provides telco as a service built on Apache Kafka to process more than 16 million call records per day. The big data streaming architecture supports MNF's operations support system and business support system (OSS/BSS) platforms, which enable critical business functions and real-time analysis.

MNF's four Kafka implementations each ran a different Kafka version, resulting in disjointed operations. "The lack of unity meant sky-high infrastructure maintenance and personnel costs, reaching nearly a quarter-million dollars annually," said Dion Beetson, MNF's head of software engineering. Maintenance also absorbed 80% of his team's efforts, which hindered progress on new projects.

The goal was to unify the platforms into a single, efficient Apache Kafka deployment in the AWS Cloud. MNF used Kubernetes and a services-based architecture, breaking each application down into services that communicate through Kafka. The company also replaced its on-premises data warehouse with a cloud data warehouse that can more elegantly consume data from Kafka topics, and it selected Instaclustr as its managed Kafka service provider.
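One reason a single Kafka deployment can serve many services plus a data warehouse is that consumers read from a shared, append-only log, and each consumer group tracks its own position in it. The toy class below is an in-memory illustration only: the `Topic` class and group names are hypothetical, it is not the Kafka client API, and it omits partitions, replication and durability.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Topic:
    """Toy append-only log, loosely modeled on a single Kafka partition.

    Each consumer group keeps its own offset, so a billing service and a
    warehouse loader can read the same records independently.
    """
    records: List[str] = field(default_factory=list)
    offsets: Dict[str, int] = field(default_factory=dict)

    def produce(self, record: str) -> int:
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record

    def poll(self, group: str, max_records: int = 10) -> List[str]:
        start = self.offsets.get(group, 0)
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)  # "commit" the new position
        return batch

if __name__ == "__main__":
    calls = Topic()
    for cdr in ["call-1", "call-2", "call-3"]:
        calls.produce(cdr)
    print(calls.poll("billing"))       # ['call-1', 'call-2', 'call-3']
    print(calls.poll("warehouse", 2))  # ['call-1', 'call-2']
    print(calls.poll("billing"))       # [] (billing is caught up)
```

Because reading does not remove records, adding another consumer, such as a new warehouse loader, does not disturb existing services.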

The unified Kafka deployment, Beetson reported, now provides a single integrated OSS/BSS platform in the cloud that has dramatically reduced operational costs and freed his team to work on new projects.

Overcoming interoperability issues

Humana is using event streaming technologies to solve interoperability challenges in healthcare. The traditional approach for exchanging data using point-to-point methods was limiting Humana's ability to integrate apps.

"When we think of a better healthcare ecosystem," said Levi Bailey, Humana's associate vice president of cloud architecture, "we really need to think about the opportunity to exchange data in a seamless way, where all participants in the ecosystem can freely exchange and integrate data to help drive optimal outcomes and experiences within their organizations. In order to achieve that, we have to be fully event-driven."

Where it all began

Big data streaming architectures deployed today have their roots in complex event processing at Stanford University in the early 1990s. "Today, many businesses plan strategic initiatives under titles such as 'business analytics' and 'optimization,'" said Stanford electrical engineering professor emeritus David Luckham. "Although they may not know it, complex event processing is usually a cornerstone of such initiatives."

Luckham coined the term complex events to describe higher-level events correlated from a series of lower-level events. His team outlined three core principles: synchronous timing of events, event hierarchies and causation. Luckham believes the application of explicit event abstraction hierarchies and causality is still in its early days, and that the widespread adoption of big data event streaming technologies will lead to holistic event processing.
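To make the idea concrete, here is a toy sketch of deriving one complex event from lower-level ones. It touches all three principles: a time window (timing), a higher-level event built from lower-level events (hierarchy), and a record of which events caused it (causation). It is not Luckham's Rapide language or any product; the event types, pattern and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Event:
    ts: float   # event time in seconds
    user: str
    kind: str   # hypothetical types: "login_failed" or "login_ok"

@dataclass(frozen=True)
class ComplexEvent:
    kind: str
    user: str
    causes: Tuple[Event, ...]  # the lower-level events it was derived from

def detect_takeover(events: List[Event], failures: int = 3,
                    window: float = 60.0) -> List[ComplexEvent]:
    """Emit a "possible_takeover" event when a user has at least `failures`
    failed logins followed by a successful one, all within `window` seconds.
    Assumes `events` is ordered by time."""
    recent: Dict[str, List[Event]] = {}
    found: List[ComplexEvent] = []
    for ev in events:
        fails = recent.setdefault(ev.user, [])
        # Timing: forget failures that fall outside the window.
        fails[:] = [f for f in fails if ev.ts - f.ts <= window]
        if ev.kind == "login_failed":
            fails.append(ev)
        elif ev.kind == "login_ok":
            if len(fails) >= failures:
                # Hierarchy and causation: the new event carries its causes.
                found.append(ComplexEvent("possible_takeover", ev.user, tuple(fails) + (ev,)))
            fails.clear()
    return found

if __name__ == "__main__":
    stream = [Event(0, "u1", "login_failed"), Event(10, "u1", "login_failed"),
              Event(20, "u1", "login_failed"), Event(25, "u1", "login_ok"),
              Event(30, "u2", "login_failed"), Event(200, "u2", "login_ok")]
    for ce in detect_takeover(stream):
        print(ce.kind, ce.user, [c.ts for c in ce.causes])
    # possible_takeover u1 [0, 10, 20, 25]
```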

"Event-driven programming and edge computing are scratching the surface," Luckham noted. "The future lies in unifying all the event processing within the enterprise in a holistic manner. It is the service-oriented architecture for events."

Humana, a health insurance company based in Louisville, Ky., uses a variety of specialized technologies to solve the interoperability challenges in healthcare, "but the engine really running the exchange of data is Kafka and Confluent," Bailey explained. The interoperability platform also uses IBM API Connect to manage and administer the deployed APIs, as well as Google Cloud services like App Engine, Compute Engine and Kubernetes Engine.

"Thanks to our event-driven interoperability platform, we were able to react very quickly to the COVID-19 crisis," Bailey said. To help respond to the pandemic, Humana generated analytical insights by collecting data from its CRM systems and call centers, as well as from different agencies and organizations via its reusable interoperability platform.

Low-latency analytics

One of the trends in big data event streaming technologies is reducing the latency in event processing. "The more we move toward distributed cloud-native application architectures, the higher the potential payout of low-latency analytics in IT operations," said Torsten Volk, managing research director at analyst consultancy Enterprise Management Associates.

Volk believes low latency will lead to more accurate and responsive applications for anomaly detection, trend spotting and root cause analysis. "Capturing and correlating all of these events in near real time," he reasoned, "might provide us with an increase in data resolution that could allow us to predict operational risk or imminent failure at a much earlier stage." Further improvements should also make it easier to correlate different data streams continuously across the enterprise.
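As one simple illustration of streaming anomaly detection, the sketch below flags values that sit far from the mean of a trailing window. The rolling z-score is a generic technique swapped in for illustration, not a method Volk prescribes, and the window size and limit are hypothetical defaults.

```python
import math
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

def rolling_anomalies(values: Iterable[float], window: int = 20,
                      z_limit: float = 3.0) -> Iterator[Tuple[int, float, float]]:
    """Yield (index, value, z_score) for values far from the trailing mean.

    Statistics are computed over the previous `window` values only, so the
    detector adapts as the stream drifts and never looks ahead.
    """
    history: Deque[float] = deque(maxlen=window)
    for i, x in enumerate(values):
        if len(history) == window:
            mean = sum(history) / window
            std = math.sqrt(sum((h - mean) ** 2 for h in history) / window)
            if std > 0:
                z = (x - mean) / std
                if abs(z) > z_limit:
                    yield i, x, z
        history.append(x)

if __name__ == "__main__":
    # Hypothetical request latencies in milliseconds with one spike.
    latencies = [100.0, 102.0] * 10 + [180.0, 101.0]
    for i, x, z in rolling_anomalies(latencies):
        print(f"index {i}: {x} ms (z = {z:.1f})")  # index 20: 180.0 ms (z = 79.0)
```

Lower processing latency matters here because the anomaly is only useful if it is flagged before the failure it predicts.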

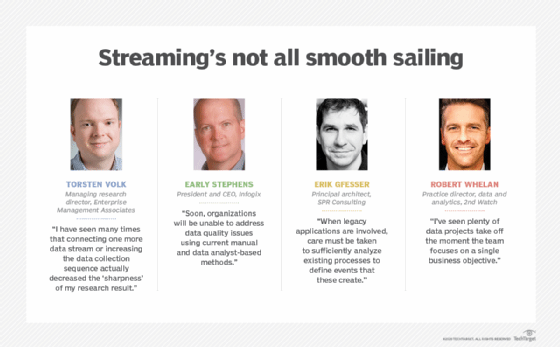

But there are practical tradeoffs in building a more sophisticated infrastructure. "I have seen many times that connecting one more data stream or increasing the data collection sequence actually decreased the 'sharpness' of my research results," Volk said.

It's all about the data

Companies often face operational and technical hurdles in getting big data streaming projects off the ground, said Robert Whelan, practice director, data and analytics at cloud consultancy 2nd Watch.

On the operational side, it's important to decide what to do with the data in addition to formatting and reshaping it. On the technical side, enterprises must decide how much data to retain in a "hot" location. "This decision is important because it can get costly very fast with a lot of unused data piling up," Whelan said. "I've seen plenty of data projects take off the moment the team focuses on a single business objective."
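The hot-retention decision Whelan describes often comes down to an age cutoff. The sketch below splits records into a hot tier and a cold tier by age; the `Record` type and the 24-hour window are hypothetical placeholders, and choosing the real cutoff is exactly the business decision he is talking about.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple

@dataclass
class Record:
    key: str
    created: datetime

def tier_records(records: List[Record], now: datetime,
                 hot_for: timedelta) -> Tuple[List[Record], List[Record]]:
    """Split records into (hot, cold) by age.

    Records newer than `hot_for` stay in fast, more expensive storage;
    older ones become candidates for cheaper storage or deletion.
    """
    cutoff = now - hot_for
    hot = [r for r in records if r.created >= cutoff]
    cold = [r for r in records if r.created < cutoff]
    return hot, cold

if __name__ == "__main__":
    now = datetime(2021, 6, 1, 12, 0)
    records = [Record("a", now - timedelta(hours=1)),
               Record("b", now - timedelta(hours=30)),
               Record("c", now - timedelta(days=10))]
    hot, cold = tier_records(records, now, hot_for=timedelta(hours=24))
    print([r.key for r in hot], [r.key for r in cold])  # ['a'] ['b', 'c']
```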

Streaming applications can potentially amplify problems with data quality. "As data volumes grow, so do data quality errors," said Early Stephens, president and CEO of data controls and analytics software maker Infogix. "Soon, organizations will be unable to address data quality issues using current manual and data analyst-based methods." He recommended several ways to scale data quality management for streaming analytics:

- Establish data quality rules at the source.

- Conduct in-line checks to guarantee that the data complies with standards and is complete.

- Detect and delete duplicate messages.

- Verify the timely arrival of all messages.

- Reconcile and validate all messages between producers and consumers to ensure that data has not been altered, lost or corrupted.

- Conduct data quality checks to confirm expected data quality levels, completeness and conformity.

- Monitor data streams for expected message volumes and set thresholds.

- Establish workflows to route potential issues for investigation and resolution.

- Monitor timeliness to identify issues and ensure service-level agreement compliance.
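Several of these checks can run in line as messages arrive. The sketch below combines completeness, duplicate, timeliness and volume checks with a producer/consumer reconciliation fingerprint. It is a hypothetical illustration, not Infogix's product or any vendor's API; the message schema, thresholds and function names are assumptions, and the `issues` list stands in for a real investigation workflow.

```python
import hashlib
from typing import Any, Dict, List, Set, Tuple

Message = Dict[str, Any]
# Hypothetical schema: every message needs these fields.
REQUIRED_FIELDS = ("id", "event_time", "payload")

def check_batch(batch: List[Message], arrival_time: float, seen_ids: Set[str],
                max_delay_s: float, min_volume: int) -> Tuple[List[Message], List[str]]:
    """Run in-line quality checks on one batch of messages.

    Returns (clean, issues). `seen_ids` persists across batches so that
    duplicates are caught over time, not just within one batch.
    """
    clean: List[Message] = []
    issues: List[str] = []
    # Volume check: too few messages can signal an upstream outage.
    if len(batch) < min_volume:
        issues.append(f"low volume: {len(batch)} < {min_volume}")
    for msg in batch:
        # Completeness check: required fields must be present and non-null.
        missing = [f for f in REQUIRED_FIELDS if msg.get(f) is None]
        if missing:
            issues.append(f"incomplete {msg.get('id')}: missing {missing}")
            continue
        msg_id = str(msg["id"])
        # Duplicate detection: drop messages whose ID was already accepted.
        if msg_id in seen_ids:
            issues.append(f"duplicate {msg_id}")
            continue
        seen_ids.add(msg_id)
        # Timeliness check: late messages are kept but flagged for SLA tracking.
        if arrival_time - float(msg["event_time"]) > max_delay_s:
            issues.append(f"late {msg_id}")
        clean.append(msg)
    return clean, issues

def fingerprint(messages: List[Message]) -> Tuple[int, str]:
    """Count plus an order-independent SHA-256 over (id, payload) pairs.

    Producer and consumer each compute this; a mismatch suggests messages
    were lost, altered or duplicated in between.
    """
    digest = hashlib.sha256()
    for line in sorted(f"{m['id']}|{m['payload']}" for m in messages):
        digest.update(line.encode("utf-8"))
    return len(messages), digest.hexdigest()

if __name__ == "__main__":
    produced = [{"id": "m1", "event_time": 100.0, "payload": "a"},
                {"id": "m2", "event_time": 101.0, "payload": "b"},
                {"id": "m3", "event_time": 50.0, "payload": "c"}]  # arrives late
    received = produced + [dict(produced[0]),  # redelivered duplicate
                           {"id": "m4", "event_time": 102.0, "payload": None}]
    clean, issues = check_batch(received, arrival_time=105.0, seen_ids=set(),
                                max_delay_s=30.0, min_volume=3)
    print(issues)
    print("reconciled:", fingerprint(clean) == fingerprint(produced))
```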

Developing new ways of thinking

When defined effectively, real-time streaming can be the backbone of an entire enterprise ecosystem, providing a single source from which to draw data, especially for cases in which microservice architectures are being built.

But developers working on big data streaming applications will need to embrace a new paradigm. Ostensibly, data in tools like Apache Kafka and Amazon Kinesis can be processed much like data in tables, said Erik Gfesser, principal architect at SPR Consulting. But the data in such tables is defined in the context of windows based on varying criteria. For example, a use case that only considers data within a certain time constraint will define such tables differently than a streaming use case that permits consideration of straggling events. "Understanding these concepts can be challenging for some traditional data practitioners," Gfesser said.
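The difference Gfesser describes shows up in how a window treats late, or straggling, events. The sketch below counts events in fixed (tumbling) event-time windows and uses an allowed-lateness setting to decide whether a straggler still counts. Watermarks and allowed lateness are common stream-processing concepts, but this is plain Python under stated assumptions (the watermark is simply the largest event time seen so far), not Kafka's, Kinesis's or any framework's code.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def windowed_counts(events: Iterable[Tuple[float, str]], size: float,
                    allowed_lateness: float) -> Tuple[Dict[float, int], List[Tuple[float, str]]]:
    """Count events per tumbling event-time window of `size` seconds.

    Events arrive in processing order and may be out of event-time order.
    An event is accepted while its window end plus `allowed_lateness` is
    still ahead of the watermark; otherwise it is returned as dropped.
    """
    counts: Dict[float, int] = defaultdict(int)
    dropped: List[Tuple[float, str]] = []
    watermark = float("-inf")
    for event_time, key in events:
        watermark = max(watermark, event_time)
        window_start = (event_time // size) * size
        if window_start + size + allowed_lateness > watermark:
            counts[window_start] += 1
        else:
            dropped.append((event_time, key))
    return dict(counts), dropped

if __name__ == "__main__":
    # (event_time, id) pairs in arrival order; "d" and "f" are stragglers.
    arrivals = [(1.0, "a"), (4.0, "b"), (12.0, "c"), (7.0, "d"), (25.0, "e"), (9.0, "f")]
    for lateness in (0.0, 5.0, 20.0):
        print(lateness, windowed_counts(arrivals, size=10.0, allowed_lateness=lateness))
```

With no lateness allowance, both stragglers are dropped; a 5-second allowance admits "d" but not "f"; a 20-second allowance admits both. Each setting yields a different "table" from the same stream.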

In addition, the concept of an "event" may take some development teams time to digest, especially given the way events might be defined for scenarios currently processed by legacy applications. And greater capabilities and flexibility typically demand more development effort.

Projects can get more challenging when development teams try to calibrate the handoffs between streaming platforms and legacy systems. "When legacy applications are involved," Gfesser added, "care must be taken to sufficiently analyze existing processes to define events that these create."
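One simple, hypothetical way to surface events from a legacy system that was never designed to emit them is to compare periodic snapshots of one of its tables. The sketch below turns two snapshots keyed by primary key into created, updated and deleted events; the table contents and event names are illustrative assumptions. Log-based change data capture is a common alternative that avoids missing intermediate changes.

```python
from typing import Dict, List, Tuple

Row = Dict[str, str]

def diff_snapshots(before: Dict[str, Row], after: Dict[str, Row]) -> List[Tuple[str, str, Row]]:
    """Derive (event_type, key, row) tuples from two table snapshots.

    event_type is "created", "updated" or "deleted"; for deletions the
    row is the last known version.
    """
    events: List[Tuple[str, str, Row]] = []
    for key, row in after.items():
        if key not in before:
            events.append(("created", key, row))
        elif before[key] != row:
            events.append(("updated", key, row))
    for key, row in before.items():
        if key not in after:
            events.append(("deleted", key, row))
    return events

if __name__ == "__main__":
    before = {"c1": {"status": "active"}, "c2": {"status": "active"}}
    after = {"c1": {"status": "suspended"}, "c3": {"status": "active"}}
    for event in diff_snapshots(before, after):
        print(event)
```

The generic "updated" event shows why Gfesser's advice matters: only analysis of the legacy process can say whether a status change to "suspended" should become a business event, such as an account suspension, that downstream consumers care about. Snapshot diffing also misses any changes that occur and are reversed between two snapshots.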